In less than a month, WWDC, Apple's Worldwide Developers Conference, will reveal the company's plans for the upcoming iOS 18 and iPadOS 18 software updates. But we already know what accessibility features will be hitting iPhones and iPads later this year, and many of them will be helpful to everyone, not just users with accessibility needs.

On May 15, Apple laid out its plans for new accessibility features, which will take advantage of advanced hardware and software on iPhone or iPad, such as Apple silicon, artificial intelligence, and machine learning, to power fresh usability tools for people with physical disabilities, conditions that affect speech, vision-related difficulties, and even sensitive tummies. But you don't have to have any of these conditions to be excited about all the accessibility tools listed below.

Developers will gain access to the iOS 18 and iPadOS 18 betas on June 10 — the first day of WWDC24 — and they'll have about three months to prepare their apps for the public release in the fall. That means some of the accessibility features below will be available in third-party apps, not just built-in apps and services.

1. Eye Tracking

Apple's most anticipated accessibility tool may be designed for users with physical disabilities, but it'll be available to anyone who wants to control their iPhone or iPad using only their eyes.

Eye Tracking uses your front-facing camera for setup and calibration, then analyzes your eye movements with on-device intelligence, so no additional hardware or accessories are needed. The data it collects is kept securely on your device, so you don't have to worry about anything being sent or intercepted in transit.

The upcoming feature will let you navigate any iOS or iPadOS app using your eye movements. It then relies on Dwell Control, an AssistiveTouch feature, to perform the action when you hold your eyes steady on a selected screen element or a specific area of the screen.

Dwell Control is traditionally used with a mouse or other pointer and assumes the user can't physically tap or click anything. When the user hovers over an element with the cursor for a set period of time, it simulates a tap and can even simulate swipes, physical buttons, and other gestures.
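At its core, the hover-to-tap behavior described above is a timer that restarts whenever the pointer drifts too far. The Python sketch below is a hypothetical illustration of that idea, not Apple's implementation; the `DwellDetector` class, the 1-second `dwell_time`, and the 10-point `tolerance` are all assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple

Point = Tuple[float, float]


@dataclass
class DwellDetector:
    """Hypothetical dwell-to-tap logic (not Apple's code): reports a tap once the
    pointer stays within `tolerance` points of where it settled for `dwell_time` seconds."""
    dwell_time: float = 1.0   # assumed: seconds the pointer must hold still
    tolerance: float = 10.0   # assumed: jitter radius, in points, still counted as "still"
    _anchor: Optional[Point] = field(default=None, init=False, repr=False)
    _start: float = field(default=0.0, init=False, repr=False)
    _fired: bool = field(default=False, init=False, repr=False)

    def update(self, x: float, y: float, t: float) -> Optional[Point]:
        """Feed one pointer (or gaze) sample taken at time t; return a tap location or None."""
        if self._anchor is None or self._distance(x, y) > self.tolerance:
            # First sample, or the pointer moved too far: restart the countdown here.
            self._anchor = (x, y)
            self._start = t
            self._fired = False
            return None
        if not self._fired and t - self._start >= self.dwell_time:
            # Held steady long enough: simulate exactly one tap per dwell.
            self._fired = True
            return self._anchor
        return None

    def _distance(self, x: float, y: float) -> float:
        # Straight-line distance from the point where the current dwell started.
        ax, ay = self._anchor
        return ((x - ax) ** 2 + (y - ay) ** 2) ** 0.5


if __name__ == "__main__":
    detector = DwellDetector()
    samples = [(100, 200, 0.0), (103, 198, 0.5), (101, 201, 1.0), (102, 200, 1.5), (300, 50, 1.6)]
    print([detector.update(x, y, t) for x, y, t in samples])
    # prints [None, None, (100, 200), None, None]
```

Small jitter around (100, 200) still counts as holding still, so one tap fires after a full second, and the jump to (300, 50) restarts the countdown. With Eye Tracking, the samples would presumably come from gaze estimates rather than a mouse, but the same hold-still-then-tap principle applies.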

Eye Tracking is just one of Apple's many new artificial intelligence features coming to iOS 18 and iPadOS 18 later this year.

2. Music Haptics

With Music Haptics, deaf or hard-of-hearing users will be able to "listen" to music playing on their iPhone thanks to the device's Taptic Engine. When music plays on the iPhone, the engine produces taps, textures, and refined vibrations that follow the audio, so the user can feel the song. Apple says the feature works across millions of songs on Apple Music, and there will be a Music Haptics API so that app developers can also make music more accessible to their users.

Like Eye Tracking, Music Haptics is open to anyone, so it could add another layer to your music-listening experience.

3. Listen for Atypical Speech

Apple is improving Siri's speech recognition abilities with its upcoming Listen for Atypical Speech feature. This tool will help Siri understand users with cerebral palsy, amyotrophic lateral sclerosis (ALS), multiple sclerosis (MS), Parkinson's disease, traumatic brain injury (TBI), stroke, or other conditions that affect speech.

Listen for Atypical Speech uses artificial intelligence and on-device machine learning to recognize a user's speech patterns. While Siri is the obvious beneficiary, it could help in other areas of iOS and iPadOS, such as the Translate app, Voice Control, and Personal Voice.

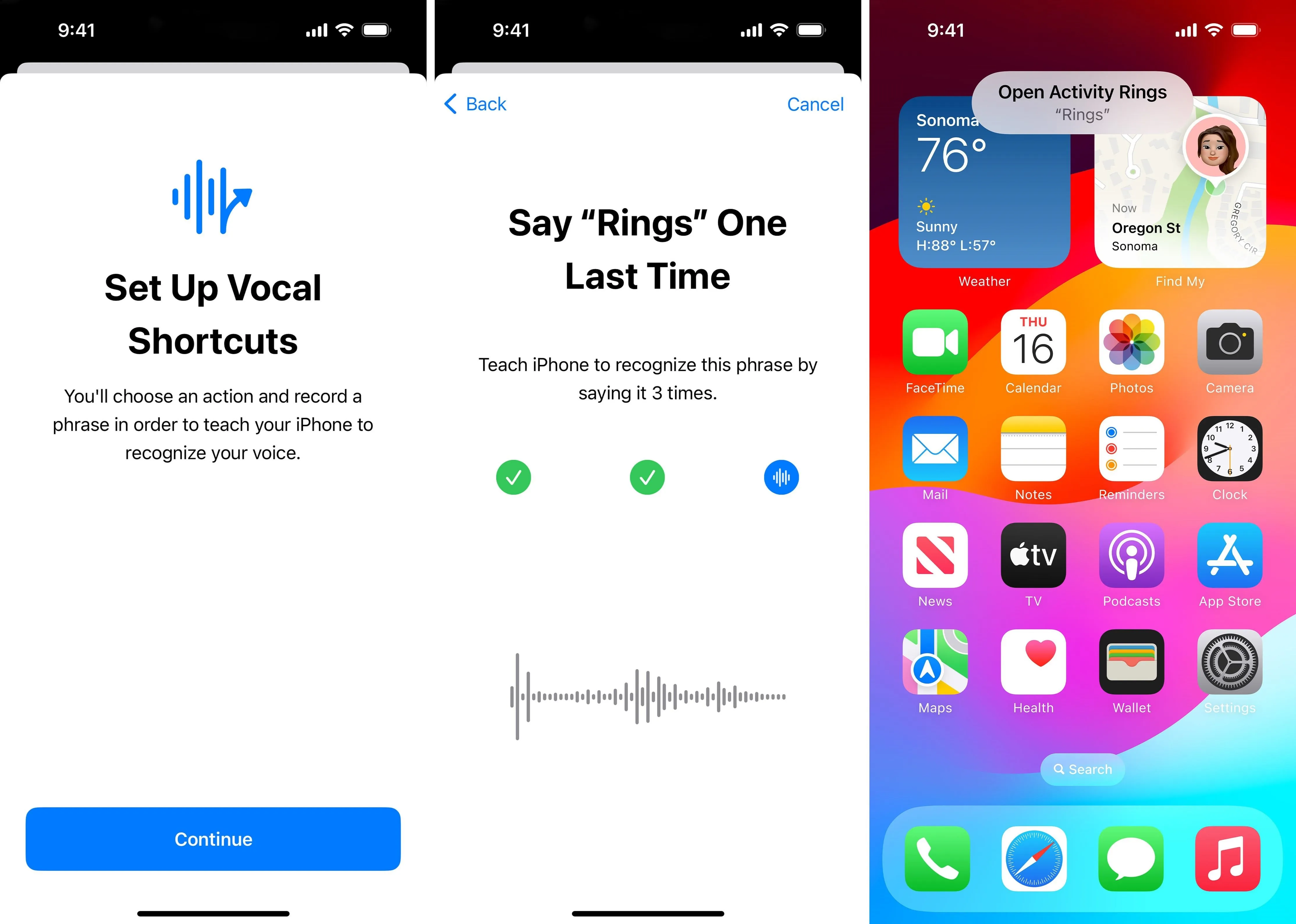

4. Vocal Shortcuts

Another area where Listen for Atypical Speech can help is with the upcoming Vocal Shortcuts accessibility feature. These shortcuts will let you assign custom spoken sounds and utterances to specific actions on your device. The available actions will likely be the same ones available via Back Tap and AssistiveTouch.

When you speak an assigned sound or utterance, Siri will understand and launch its designated shortcut, whether it's something as easy as scrolling down a page or a more complex task like starting multiple timers at once.

5. Vehicle Motion Cues

According to various medical sources, a massive chunk of the worldwide population is susceptible to motion sickness, with estimates ranging from 25% to 33% and some as high as 65%. While those over 50 are generally less likely to get motion sick, practically anyone older than two years can become motion sick under sufficient stimulus.

If you get motion sickness easily when interacting with devices in moving vehicles, Apple's got your back. On the upcoming iOS 18 and iPadOS 18 software updates, you'll find an advanced accessibility feature called Vehicle Motion Cues. The feature can be set to turn on automatically when motion in a vehicle is detected, or you can switch it on and off in Control Center.

With Vehicle Motion Cues, animated dots on the edges of the screen represent changes in vehicle motion to help reduce sensory conflict without interfering with the main content. Using sensors built into iPhone and iPad, Vehicle Motion Cues recognizes when a user is in a moving vehicle and responds accordingly.

6. New Voices for VoiceOver

While we didn't see any examples, Apple says new voices will be available for VoiceOver later this year. There are currently 22 personalities available for VoiceOver in US English on iOS 17 and iPadOS 17, as well as 15 novelty voices and numerous other English variations.

7. Other VoiceOver Additions

Besides new voices, VoiceOver on iOS 18 and iPadOS 18 will give blind and low-vision users a flexible Voice Rotor and custom volume control. And on macOS 15, you'll also be able to customize VoiceOver keyboard shortcuts.

8. New Reader Mode in Magnifier

Magnifier, Apple's real-world magnifying glass for blind and low-vision users, will have a new Reader Mode for text seen in the frame.

Right now, Text Detection shows text visible in the frame over top of the real-world picture, and there's not much you can do with it beyond scrolling through it. On iOS 18 and iPadOS 18, you'll be able to turn off speaking and expand the text into Reader Mode to fill the entire display. For easier reading, you'll be able to change the background color, font, and font size.

9. Launch Detection Mode with Action Button

For those of you with an iPhone 15 Pro or 15 Pro Max, iOS 18 will add Magnifier's Detection Mode as an option for the Action button. Detection Mode houses the People Detection, Door Detection, Image Descriptions, Text Detection, and Point and Speak tools.

10. Braille Screen Improvements

According to Apple, Braille users will be getting four new features on iOS 18 and iPadOS 18, which include:

- A new way to start and stay in Braille Screen Input for faster control and text editing.

- Japanese language availability for Braille Screen Input.

- Support for multi-line braille with Dot Pad.

- The option to choose different input and output tables.

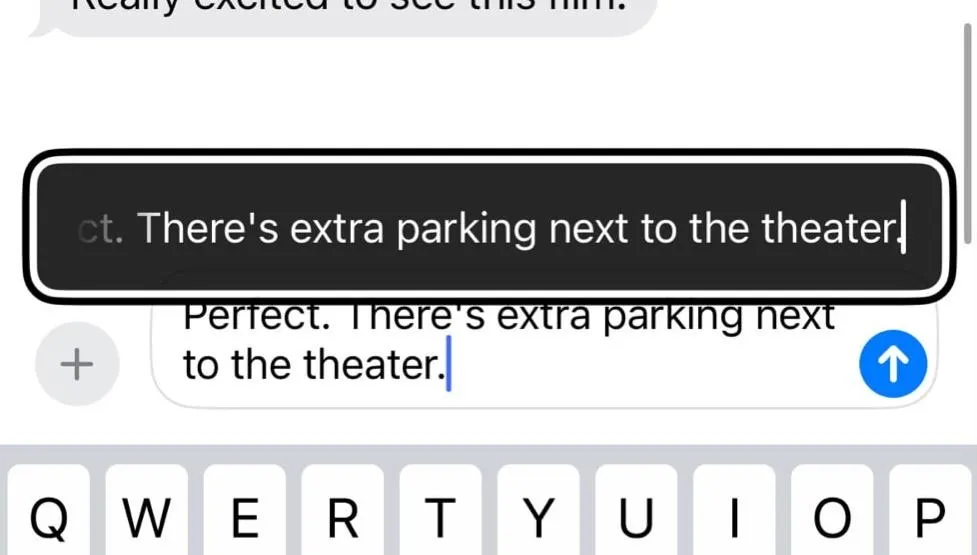

11. Hover Typing

Low-vision users are getting another new feature on iOS 18 and iPadOS 18 to help them read things better on the screen. With Hover Typing, larger text appears over the screen whenever you're typing in a text field, using the background color, font, and font color that work best for you, so you can focus on that enlarged text rather than on the text field itself.

12. Personal Voice Improvements

Personal Voice, introduced with iOS 17 and iPadOS 17, lets you create a synthesized version of your own voice on your iPhone or iPad to use with Live Speech, which speaks whatever you type in communication apps. Likely thanks to Listen for Atypical Speech, Personal Voice will now help users who have difficulty reading and pronouncing complete sentences during the initial training, allowing them to use shortened phrases instead.

Mandarin Chinese support is also coming to Personal Voice.

13. Live Speech Categories

When using Live Speech, you can save common phrases to choose from so you don't have to type out the whole thing yourself every time. The upcoming iOS 18 and iPadOS 18 updates further equip Live Speech with categories, which presumably let you group words and phrases for quicker access. You can also expect "simultaneous compatibility with Live Captions."

14. Virtual Trackpad for AssistiveTouch

Another interesting accessibility feature coming with iOS 18 and iPadOS 18 is Virtual Trackpad, a new feature for AssistiveTouch users. With it, you can control your iPhone or iPad using a small region on your screen, which acts as a resizable trackpad.

15. Finger-Tap Gestures in Switch Control

Switch Control for iPhone and iPad lets you use external accessories or native switches such as screen taps, head movements, back tap gestures, and sound actions to highlight screen elements and perform actions. With iOS 18 and iPadOS 18, you'll also be able to use finger-tap gestures in front of your camera as switches.

16. Enhanced Voice Control Vocabulary

Voice Control does exactly what it sounds like: it lets you control your iPhone or iPad with your voice. You can use any of its built-in commands or create custom speakable phrases to perform actions. On iOS 18 and iPadOS 18, you'll also get support for custom vocabularies and complex words.

17. More Accessibility Options in CarPlay

If you have CarPlay in your vehicle, you'll be able to use Voice Control to navigate the interface and control apps using your voice. You'll also get Sound Recognition support, which can alert drivers and passengers who are deaf or have hearing difficulties to honking cars, approaching sirens, and other traffic-related noises.

Color Filters will also finally be supported. Color Filters can make CarPlay's UI easier to navigate for people who are color-blind or sensitive to light. And the UI will also gain support for Bold Text and Large Text adjustments.

Cover photo by Gadget Hacks; all other images via Apple
